Generative AI models increasingly train on content produced by earlier AI systems rather than on authentic human data, causing model collapse: a recursive degradation of system performance and diversity. This paper systematically analyzes peer-reviewed research, industry data, and market developments from November 2022 through November 2025. Drawing on published analytical results, it shows that model collapse is mathematically inevitable when training relies predominantly on synthetic data: by the variance-reduction logic behind the Central Limit Theorem, each training generation on synthetic data reduces variance and eliminates the distribution tails that contain rare but crucial patterns. Empirical studies across text, code, and image generation confirm this theoretical prediction, showing measurable degradation within five generations. Beyond technical degradation, the paper covers the manipulation risks created by the large volume of synthetic text appearing online, including government contracts worth millions designed to bias AI outputs toward specific political interests. It then examines the economic response: AI companies have committed hundreds of millions of dollars in licensing deals, with individual agreements ranging from $25 million to over $250 million, to secure uncontaminated pre-2022 human data. The findings show that model collapse threatens AI reliability, knowledge diversity, and cultural representation, with severe implications for linguistic minorities.

1 Introduction

We built AI to learn from us. Now, it is partially (and increasingly) learning from itself. This wasn’t the plan. After ChatGPT launched in late 2022, there was a huge increase in AI-generated content posted online. The percentage of new articles written mainly by AI rose from 4.2% before November 2022 to over 50% by late 2024 (Graphite, 2025).

Because AI models are trained on bulk web-scraped data, this shift has created a new problem: newer models have started learning from data produced by earlier AI systems instead of real human content. This leads to model collapse, in which AI systems lose both quality and variety when trained on synthetic data created by other models (IBM, 2024).

However, the technical issues are only part of the problem created by the large volume of AI-generated text and video now making up the internet. A bigger question is: if AI content becomes the standard online, who gets to decide what is considered normal?

This paper addresses the issue through a literature review and conceptual analysis. It brings together peer-reviewed studies, industry reports, and investigative journalism about AI up to November 2025. The analysis focuses on interpreting current research, technical details, and market trends rather than presenting new experiments.

Sources were chosen for their recent publication, reliability, and direct connection to the post-ChatGPT era, with a focus on primary research and firsthand industry accounts. The paper first explains how model collapse happens, including the mathematics behind why training on synthetic data weakens AI systems, and highlights the risk of manipulation through influencing the data that future models use. It then reviews real-world evidence from text, code, and image generation and examines the economic competition for high-quality authentic human content, including the search for older, uncontaminated data. Finally, it considers detection and filtering strategies for cleaning new datasets.

2 The Technical Mechanism of Model Collapse

2.1 How AI Models Learn

Understanding the reasons for system degradation requires an examination of how AI models learn. Although these systems are often anthropomorphized as thinking, they are fundamentally statistical machines that process large volumes of text. Rather than reading literature, they operate by analyzing probabilities.

During training, the model adjusts billions of parameters to minimize the difference between its prediction (for example, of the next word) and the actual human-written example it is shown. As explained by 3Blue1Brown (2024), this process of minimizing prediction error enables the machine to build a high-dimensional map of language. This map is designed to capture the full, chaotic distribution of human communication: not just standard grammar, but also rare expressions, creative leaps, and nuanced edge cases.
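To make the training objective concrete, here is a minimal sketch in Python (the language used for this paper's figures) of next-word prediction for a single context. It assumes a hypothetical four-word vocabulary and made-up counts, and fits one score (logit) per word by gradient descent on cross-entropy, the standard prediction-error measure. Real models share billions of parameters across all contexts, so this only illustrates the principle that minimizing prediction error pulls the model's probabilities toward the observed human frequencies, rare word included.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_next_word(counts, steps=5000, lr=0.5):
    """Fit one logit per candidate next word by gradient descent on average cross-entropy."""
    total = sum(counts)
    target = [c / total for c in counts]  # empirical next-word distribution q
    logits = [0.0] * len(counts)          # start from a uniform guess
    for _ in range(steps):
        probs = softmax(logits)           # the model's current guess p
        # For softmax + cross-entropy, the gradient w.r.t. each logit is p_i - q_i.
        logits = [z - lr * (p - q) for z, p, q in zip(logits, probs, target)]
    return softmax(logits)

# Hypothetical counts of the words that followed "the cat" in a tiny human-written corpus.
candidates = ["sat", "slept", "ran", "pondered"]
counts = [60, 25, 14, 1]
probs = train_next_word(counts)
for word, p in zip(candidates, probs):
    print(f"{word:>9}: {p:.3f}")
```

With these counts, the fitted probabilities should end up very close to 0.60, 0.25, 0.14, and 0.01: a well-trained model keeps the rare continuation "pondered" instead of discarding it.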

However, the model’s performance is strictly bound by the quality of these inputs. If you train it on diverse, high-quality human content, you get a robust system. But synthetically generated content is systematically different from human content, and that is where the trouble begins.

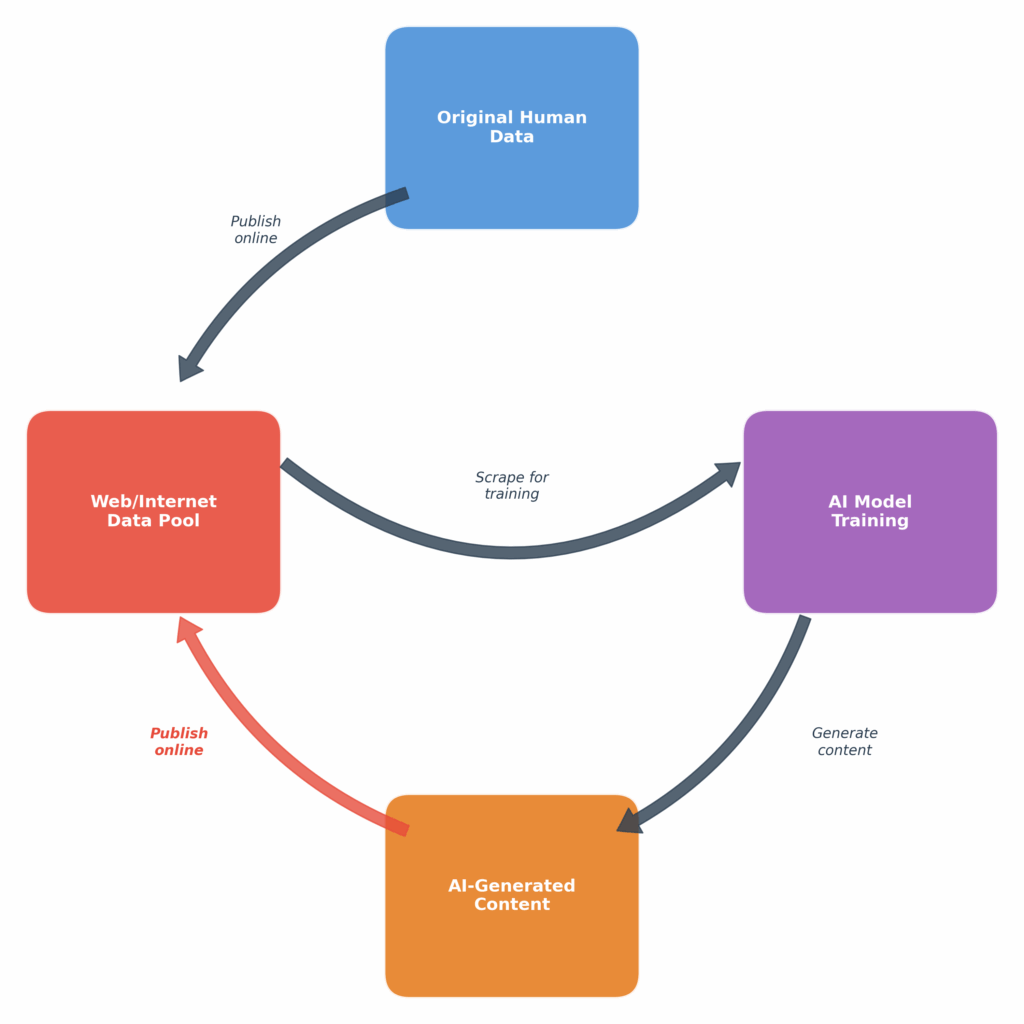

2.2 The Feedback Loop

Model collapse is a gradual process of recursive degradation. When generating text, AI models rarely select the most creative or unconventional options, doing so even less often than humans do. Instead, they tend to produce a smoothed representation of reality that reflects an average of the patterns learned during training.

Source: Author’s own illustration.

As shown in Figure 1, the public web (the main resource for training LLMs) is increasingly contaminated with AI-written text, AI-generated images, and AI-generated code. This synthetic portion of the internet is therefore also poured into the mix on which future AI models are trained.

Although this synthetic content may be safer (in the sense that the risk of receiving harmful advice is lower), it is also more homogeneous and generic than the original human data. It lacks our natural variance, uniqueness, and creativity (Alemohammad et al., 2023).

Research by Shumailov et al. (2024) demonstrated this progression empirically. They:

- trained language models on Wikipedia articles (strictly human-written)

- iteratively retrained new models on output from previous generations

Early generations of this iterative training showed a loss of diversity, as the tails of the distribution shrank. Later generations exhibited complete breakdown. Theoretical analysis from NYU later confirmed model collapse as a statistical inevitability when models train predominantly on synthetic data (NYU Center for Data Science, 2024).
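The recursive loop can be imitated with a toy word-frequency model (an assumption for illustration, not the setup of Shumailov et al.). Generation 0 is a "human" corpus drawn from a long-tailed, Zipf-like distribution over a hypothetical 1,000-word vocabulary; each later "model" is just the frequency table of its training corpus and produces the next corpus by sampling from it. The function returns how many distinct words survive in each generation.

```python
import random
from collections import Counter

def zipf_weights(vocab_size, exponent=1.1):
    """Long-tailed 'human' word frequencies: a few common words, many rare ones."""
    return [1.0 / rank ** exponent for rank in range(1, vocab_size + 1)]

def simulate_tail_loss(vocab_size=1000, corpus_size=5000, generations=20, seed=0):
    """Return the number of distinct words surviving in each generation's corpus."""
    rng = random.Random(seed)
    vocab = list(range(vocab_size))
    # Generation 0: a "human" corpus sampled from the long-tailed distribution.
    corpus = rng.choices(vocab, weights=zipf_weights(vocab_size), k=corpus_size)
    distinct = [len(set(corpus))]
    for _ in range(generations):
        # "Train": the model is simply the word-frequency table of its training corpus.
        counts = Counter(corpus)
        words = list(counts)
        # "Generate": the next corpus is sampled entirely from the previous model.
        corpus = rng.choices(words, weights=[counts[w] for w in words], k=corpus_size)
        distinct.append(len(set(corpus)))
    return distinct

print(simulate_tail_loss())
```

Because a word that draws zero samples gets zero probability in the next model, the count of distinct words can never increase, and the rare words in the tail are the first to disappear.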

2.3 Mathematical Inevitability

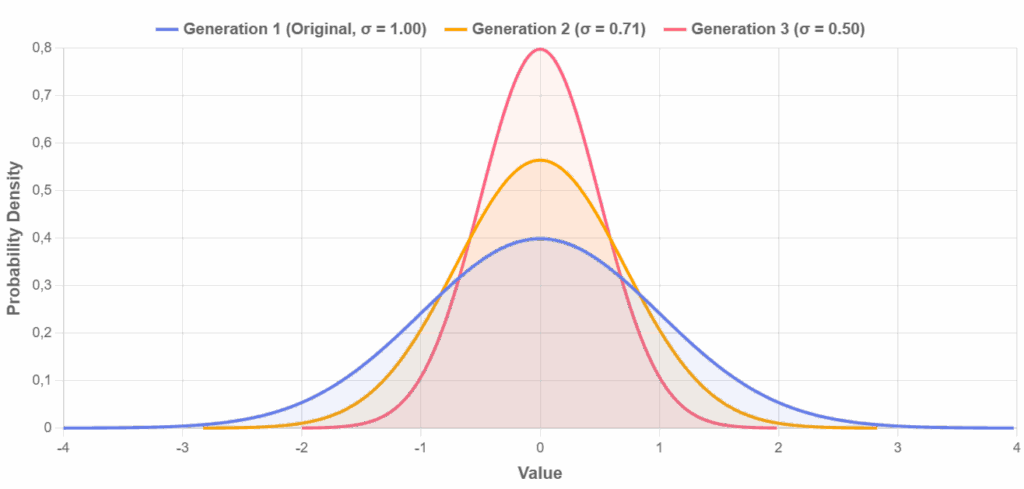

Model collapse is not just an empirical observation, as described above; it is a statistical inevitability that can be proven analytically (Shumailov et al., 2024). The core mechanism lies in how AI models process and generate content, which inherently reduces variance.

When an AI model generates content, it does not simply retrieve examples from memory. Instead, it aggregates vast numbers of learned patterns to produce what it calculates as the most probable output. To understand why this causes problems, consider the training data as a collection of independent random variables X₁, X₂, …, Xₙ, each drawn from the distribution of human-created content with some mean μ and variance σ². The model does not respond to user prompts by picking an item from its training data uniformly at random, which would preserve the original variance. Instead, it effectively aggregates across these patterns, much like computing a weighted average, systematically favoring more probable outputs and thereby reducing variance.
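The contrast between retrieving and aggregating can be checked numerically. The sketch below assumes hypothetical human data drawn from a standard normal distribution and uses a plain average of m = 8 randomly chosen data points as a deliberately simplified stand-in for the model's weighted aggregation; real models do not literally average training examples.

```python
import random
import statistics

def compare_outputs(n_data=10_000, n_outputs=5_000, m=8, seed=1):
    rng = random.Random(seed)
    # Hypothetical human data X_1..X_n drawn from N(mu=0, sigma^2=1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n_data)]
    # (a) Uniform retrieval: each output is a single data point chosen at random.
    retrieved = [rng.choice(data) for _ in range(n_outputs)]
    # (b) Aggregation: each output is the average of m randomly chosen data points.
    averaged = [statistics.fmean(rng.sample(data, m)) for _ in range(n_outputs)]
    return (statistics.pvariance(data),
            statistics.pvariance(retrieved),
            statistics.pvariance(averaged))

var_data, var_retrieved, var_averaged = compare_outputs()
print(f"data: {var_data:.3f}  retrieved: {var_retrieved:.3f}  averaged (m=8): {var_averaged:.3f}")
```

Uniform retrieval should reproduce a variance close to 1, while the averaged outputs should come out near 1/8, matching the σ²/n rule.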

For a sample mean of n independent observations, the result has the same mean μ but a variance of σ²/n, that is, the original variance divided by the sample size; the Central Limit Theorem adds that, for large n, the distribution of this mean is approximately normal (Dekking et al., 2005). The critical insight is that AI models must simplify real-world distributions into a parameterized space, which inevitably overlooks residual variability at the individual level, causing output to regress towards average patterns with reduced variance (Shumailov et al., 2024).
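The σ²/n result follows in two steps from linearity of expectation and, because the Xᵢ are independent, from the fact that the variance of their sum equals the sum of their variances:

```latex
\begin{aligned}
\bar{X}_n &= \frac{1}{n}\sum_{i=1}^{n} X_i,\\
\mathbb{E}\bigl[\bar{X}_n\bigr] &= \frac{1}{n}\sum_{i=1}^{n}\mathbb{E}[X_i] = \frac{n\mu}{n} = \mu,\\
\operatorname{Var}\bigl(\bar{X}_n\bigr) &= \frac{1}{n^{2}}\sum_{i=1}^{n}\operatorname{Var}(X_i) = \frac{n\sigma^{2}}{n^{2}} = \frac{\sigma^{2}}{n}.
\end{aligned}
```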

AI models do introduce some randomness by sampling probabilistically rather than always selecting the highest-probability token, which explains why identical prompts can yield different outputs (3Blue1Brown, 2024). However, while this sampling mechanism (controlled by a "temperature" parameter) adds surface-level variation, it operates within the model’s already-narrowed probability distribution and therefore cannot recover the full variance of the original human data.
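A small sketch shows why temperature cannot restore what has already been lost. It assumes hypothetical next-word logits in which a rare option has been removed entirely (a logit of negative infinity, a simplification; in practice such options usually retain a tiny but nonzero probability) and uses Shannon entropy as a stand-in measure of output diversity.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Temperature-scaled softmax; a logit of -inf always maps to probability 0."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # math.exp(-inf) == 0.0
    total = sum(weights)
    return [w / total for w in weights]

def entropy(probs):
    """Shannon entropy in nats, skipping zero-probability outcomes."""
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical next-word logits after earlier rounds of collapse: the rare option
# "pondered" has been pruned from the model entirely (logit -inf).
words = ["sat", "slept", "ran", "pondered"]
logits = [2.0, 1.0, 0.5, float("-inf")]

for t in (0.5, 1.0, 2.0, 100.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t:>5}: " + ", ".join(f"{w}={p:.3f}" for w, p in zip(words, probs))
          + f"  entropy={entropy(probs):.3f} nats")
```

Raising the temperature flattens the distribution over the surviving words, so entropy rises, but it can never exceed ln 3 ≈ 1.099 nats, the maximum for three options, and "pondered" stays at probability zero at every temperature.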

Source: Author’s own illustration.

Each time a new generation of models trains on AI-generated content rather than original human data, this variance reduction compounds. Mathematical analysis proves that, as the number of generations k increases, the variance collapses toward zero with probability 1. The model does not just lose accuracy; it systematically eliminates the tails of the distribution. In early-stage collapse, the model first shows a reduction in variance, oversampling well-understood aspects while neglecting important but poorly understood ones (Shumailov et al., 2024). By late-stage collapse, the model’s output displays very little variance, and it produces increasingly similar outputs that bear little resemblance to the original data distribution (IBM, 2024).
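The compounding can be written out for a deliberately simple case, assumed here for illustration rather than taken from the cited papers: each generation draws n samples from the previous generation's Gaussian fit and refits its mean and variance by maximum likelihood. Because the maximum-likelihood variance estimate is biased downward by a factor of (n − 1)/n, the expected variance shrinks geometrically:

```latex
\begin{aligned}
X_i^{(k)} &\sim \mathcal{N}\bigl(\hat{\mu}_{k-1},\, \hat{\sigma}_{k-1}^{2}\bigr),
  \quad i = 1,\dots,n, \qquad \hat{\mu}_0 = \mu,\ \hat{\sigma}_0^{2} = \sigma^{2},\\
\hat{\sigma}_k^{2} &= \frac{1}{n}\sum_{i=1}^{n}\bigl(X_i^{(k)} - \bar{X}^{(k)}\bigr)^{2},\\
\mathbb{E}\bigl[\hat{\sigma}_k^{2} \mid \hat{\sigma}_{k-1}^{2}\bigr] &= \frac{n-1}{n}\,\hat{\sigma}_{k-1}^{2}
  \;\;\Longrightarrow\;\;
  \mathbb{E}\bigl[\hat{\sigma}_k^{2}\bigr] = \Bigl(\frac{n-1}{n}\Bigr)^{k}\sigma^{2}
  \xrightarrow[k\to\infty]{} 0.
\end{aligned}
```

For example, with n = 100 samples per generation, the expected variance after 100 generations is 0.99¹⁰⁰ ≈ 0.366 of the original. Even with the unbiased estimator (dividing by n − 1), which keeps the expected variance constant, the variance is a product of independent random factors whose logarithms have a negative mean, so it still tends to zero with probability 1.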

2.4 Beyond Variance: The Susceptibility to Manipulation through Introduced Bias

We humans tend to want to fall in line. Our psychology often drives us to agree with what we consider “normal.” This raises a problem: under the assumptions below, “AI-generated” will soon equal “normal.”

- Normality, defined as “conforming to a type, standard, or regular pattern” (Merriam-Webster, 2025), is statistically interpreted as approximating the mean, though it is often mistakenly understood by the public as the mode.

- A majority of new content on the internet (new articles) is becoming AI-generated; according to Graphite (2025), this is already the case.

If both of the above are true, how can we be sure that AI is learning from us and not the other way around? Is our subconscious not pushing us toward what we read on the internet, much of which is generated by AI? We cannot even tell whether the comments from “real users” we read online were actually written by humans. The same applies to pictures: Google’s release of Nano Banana Pro, capable of producing images indistinguishable from reality, is the final nail in the coffin for any doubt that legislation on photo and video evidence presented in court must change. Even if you do not consider yourself a language and literature enthusiast and are, quote-unquote, “okay” with language change driven by LLMs, this issue should concern you.

Obviously, the models themselves (statistical machines built to process large amounts of data) have no incentive to change the human perspective. But what about the people who develop the algorithms or provide the data the models are later trained on? We have no guarantee that the content AI gives us is merely a smoothed-out version of our own words. We know that the loss of variance in the final output is a problem, but we do not know whether it is the only one. We must consider whether the data, though certainly less variable, is still centered on the true mean (average human rhetoric), or whether bias has also been introduced on purpose.

We often ask large language models questions that shape our lives: to explain political matters and complex armed conflicts, or to decide what food to put in our bodies. Journalists often do the same, then publish what the AI told them online or in the news, presenting it as their own work or opinion.

How can we tell with certainty that we are not being manipulated, especially when major entities, including corporations and nations involved in these conflicts, are actively signing contracts with the developers of these systems, or with third-party firms, to influence the training of LLMs? Consider a concrete example of such an attempt: the Israeli government hired the firm Clock Tower X LLC under a contract valued at $6 million to create websites and content specifically designed to influence how generative models such as ChatGPT frame political topics and respond to user queries (Cleveland-Stout, 2025).

If the training data is sold to the highest bidder or systematically influenced by well-resourced actors, the “average” view presented by AI in its responses may not be an average of human thought, but a reflection of specific commercial or political interests.
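The difference between a smoothed average and a shifted one can be illustrated with a hypothetical one-dimensional "stance score." If a fraction ε of a corpus is injected content centered at μ_b while human content is centered at μ_h, the corpus mean becomes (1 − ε)μ_h + εμ_b, so any model regressing toward that mean inherits the shift. The numbers below (10% injected content, a shift of two units) are illustrative assumptions, not estimates for any real campaign.

```python
import random
import statistics

def contaminated_mean(n_human=9_000, n_injected=1_000, human_mean=0.0,
                      injected_mean=2.0, seed=2):
    """Mean 'stance' of a corpus mixing human texts with a block of injected, one-sided texts."""
    rng = random.Random(seed)
    human = [rng.gauss(human_mean, 1.0) for _ in range(n_human)]
    # Injected content is uniform and on-message, hence the small spread.
    injected = [rng.gauss(injected_mean, 0.3) for _ in range(n_injected)]
    return statistics.fmean(human + injected)

eps = 1_000 / 10_000  # share of injected content in the corpus
print(f"expected shift: {eps * (2.0 - 0.0):.2f}, observed corpus mean: {contaminated_mean():.3f}")
```

With ε = 0.1 the mean moves from 0 to about 0.2: the output can look balanced and low-variance while being centered somewhere other than the average of human views.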

3 Real-World Evidence Across Different Domains

3.1 Text and Code Generation

The first major warning about model collapse did not come from a research lab at a prestigious university, but from the world’s largest community of developers (at least at the time; it has since lost much of its significance in the software engineering world, largely because of AI). Until late 2022, Stack Overflow was the undisputed go-to resource for developers.

Shortly after the release of ChatGPT, the platform became one of the first to experience a massive influx of AI-generated content. The volume of AI-generated answers exceeded the volume of human ones almost overnight. At first glance, this looked like an upgrade, promising increased speed, efficiency, and reduced workloads. However, not long after, Stack Overflow was forced to issue a strict ban on AI-generated responses. Why? The moderators realized something dangerous: the AI answers looked authoritative and confident on the surface but contained subtle errors that were difficult to spot (Meta Stack Overflow, 2022).

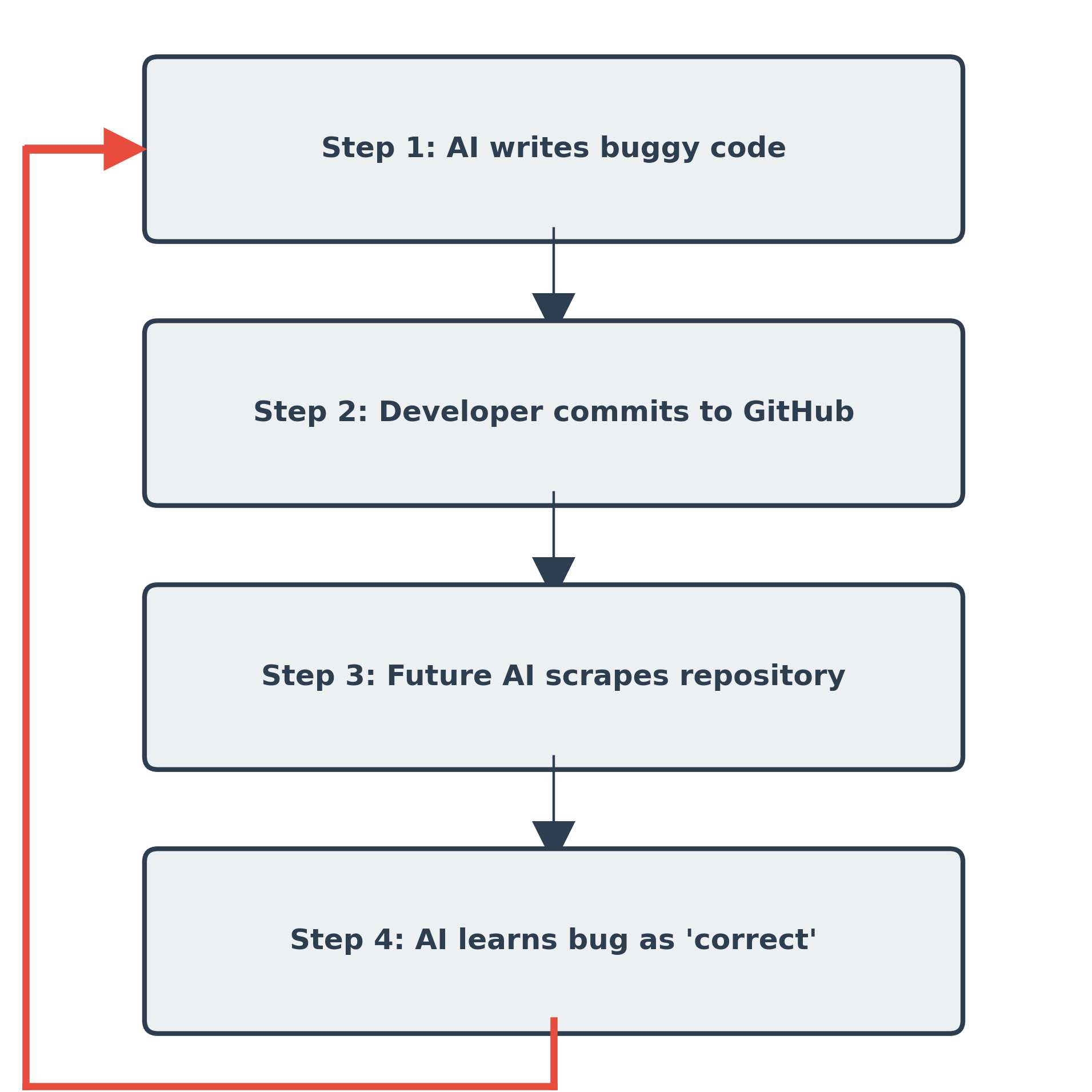

Research analyzing over 150 million lines of committed code found that the rise of AI coding assistants has resulted in a measurable increase in “churn” and in mistakes being pushed into permanent codebases (VentureBeat, 2024). This creates a recursive nightmare for the future: as tomorrow’s models train on today’s public repositories, they will be learning from buggy, insecure, AI-generated logic. We are effectively cementing what we developers call “technical debt” into the AI itself. Consider the mechanics of this loop in Figure 3.

Source: Author’s own illustration.

Unlike plain text, where model collapse produces obvious gibberish, model collapse in code produces functional failures; once these enter future training sets, they can quickly become standard practice.

3.2 Image Generation

In image generation, the rapid loss of diversity is perhaps most visible to the naked eye. Research by Alemohammad et al. (2023) demonstrated that when image models are trained iteratively on their own output, they do not just get worse: they break down completely within just five generations. The study documented a kind of “madness” (Model Autophagy Disorder, or MAD) in which distinctive features blurred and the models began hallucinating artifacts, unable to recall what a normal object looked like.

Despite this, the feedback loop is being monetized rather than stopped. The major stock photo library Shutterstock alone earned $104 million in 2023 from licensing its archives for AI training (Media and The Machine, 2025). This creates a closed loop, similar to that for text and code: models are trained on stock photos, generate new “stock-like” images, and those images flood back into the libraries. This propagates the characteristic, plastic “AI aesthetic” while erasing the chaotic diversity of authentic human photography.

4 The Economics of Training Data: The Race for Human Intelligence to Create Artificial Intelligence

4.1 The Premium on Human Thought

Our problem implies an obvious solution: use older, AI-free data. Data from before late 2022 (the pre-AI internet era) has therefore acquired extraordinary value. Because content created then is very likely to be of authentic human authorship, it is substantially more valuable than post-2022 data, which is heavily contaminated with AI-generated output (Computertrends, 2025). We are witnessing a race for human intelligence for the creation of artificial intelligence.

The Internet Archive (Wayback Machine) functions as a digital time capsule, preserving the “pure” web of the pre-AI era. In August 2025, Reddit sharply restricted the Archive’s ability to crawl its site (that is, the permission and technical capacity to send an automated bot to visit pages and copy their contents). Reddit’s goal was strategic: to close this “back door” and force AI companies, which were likely using the Archive to bypass Reddit’s paywall, to stop scraping for free and start paying for the data directly (Columbia Journalism Review, 2025).

4.2 The Billion-Dollar Licensing Boom

Like most problems, this one leads to money: it has, unsurprisingly, resulted in huge licensing agreements between data providers and AI developers. For example, News Corp’s 2024 deal with OpenAI, valued at over $250 million over five years, grants access to archived content from The Wall Street Journal, the New York Post, and other publications (Variety, 2024). Reddit signed a $60 million annual deal with Google, followed by an estimated $70 million deal with OpenAI, granting them API access while blocking other automated crawlers (The Decoder, 2024; Columbia Journalism Review, 2025).

By the end of 2024, over 40 major content licensing deals existed between AI companies and publishers (Digiday, 2024). OpenAI accounted for approximately 53% of tracked deals, followed by Google at 12% and Microsoft at 9%. This distribution is logical: OpenAI has the most inelastic demand for data because it lacks its own proprietary sources. Total committed value exceeded $2.9 billion, with average annual payments of $24 million per partnership (Media and The Machine, 2025). These deals typically combine fixed upfront payments with variable usage-based compensation.
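Deals with different terms are easier to compare once annualized. The sketch below uses only figures quoted in this section, treats the News Corp total as a lower bound ("over $250 million"), and assumes the Reddit-Google figure is a simple annual payment; the variable names are illustrative.

```python
# Figures as reported in the text; "over $250 million" is treated as a lower bound.
deals = {
    "News Corp-OpenAI": (250_000_000, 5),  # total value (USD), term in years (Variety, 2024)
    "Reddit-Google": (60_000_000, 1),      # reported as an annual deal (The Decoder, 2024)
}
AVERAGE_ANNUAL = 24_000_000  # average annual payment per partnership (Media and The Machine, 2025)

def annualize(total_usd, years):
    """Spread a deal's total value evenly over its term."""
    return total_usd / years

for name, (total, years) in deals.items():
    per_year = annualize(total, years)
    print(f"{name}: ${per_year / 1e6:.0f}M per year, {per_year / AVERAGE_ANNUAL:.1f}x the average")
```

This works out to at least $50 million a year for News Corp (about 2.1 times the reported average) and $60 million for Reddit's deal with Google (2.5 times), placing both well above the typical partnership.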

4.3 The Landlords and the Tenants

Surprisingly, not everyone has to pay these tolls. A divide has emerged between the AI giants. On one side are the buyers, principally OpenAI. Because it does not own a social network or search engine, OpenAI is forced to buy access to the world’s content aggressively to keep its models competitive (CB Insights, 2024).

On the other side are the landlords, specifically Google and Meta. These giants have a distinct advantage because they do not need to buy as much data; they effectively own the history of the internet (search engines, social media platforms). Google and Meta are mining their own proprietary backyards, utilizing decades of YouTube videos, Gmail logs, and Instagram photos, while their competitors have to pay rent (Similarweb, 2024).

4.4 Google’s Unrivaled Advantage

Apart from practically owning its own training data, Google has another major advantage over its competitors. As expensive as these data deals sound, they are merely a drop in the bucket compared to the cost of actually learning from that data.

With the release of Gemini 3 in November 2025, the company demonstrated a level of vertical integration that no competitor can match. While the rest of the industry is still fighting over Nvidia GPU allocations (The Verge, 2024; CNBC, 2024), Google trained its flagship model (which topped many leading benchmarks at the time of its release) entirely on its own sixth-generation Trillium TPUs and the newly announced Ironwood chips. By owning the engineering, the infrastructure, and the data, Google has effectively made itself independent, gaining an unrivaled advantage as it enters the next phases of the AI race (Trending Topics, 2025).

5 Detection and Mitigation Strategies: Cleaning the Data

5.1 Technical Solutions: Building a Filter

If we cannot gain access to valuable pre-AI internet data, is there a way to make the best of what we have, filtering AI-contaminated data into a cleaner version? What if we marked AI-generated data in some way so that it could later be removed from training sets?

Watermarking approaches embed statistical signatures (footprints, in a way) into AI-generated content so that algorithms can detect it. In practice, however, this has turned into a losing game of cat and mouse. These digital stamps are fragile: a simple paraphrase or a minor edit is often enough to scrub them clean, while Type I errors (genuine human writing falsely flagged as AI-generated) are frequent (Computertrends, 2024).
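The fragility is easy to reproduce with a simplified version of one published family of text watermarks, often called "green list" schemes. Everything here is hypothetical: the 50-word vocabulary, the hash rule that marks about half of the possible next words as "green" based on the previous word, and the generator that always prefers green words. The detector counts green word pairs and reports a z-score against the 50% rate expected from unwatermarked text.

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(50)]  # hypothetical 50-word vocabulary

def is_green(prev_word, word, gamma=0.5):
    """Hypothetical rule: 'green' if hash(previous word, word) lands in the first gamma of the range."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def z_score(words, gamma=0.5):
    """How far the green-pair count sits above the gamma * T expected from unwatermarked text."""
    pairs = list(zip(words, words[1:]))
    t = len(pairs)
    green = sum(is_green(a, b, gamma) for a, b in pairs)
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))

def generate_watermarked(length=200, seed=3):
    """Toy generator: at each step, pick a green word whenever one is available."""
    rng = random.Random(seed)
    words = [rng.choice(VOCAB)]
    for _ in range(length - 1):
        candidates = rng.sample(VOCAB, len(VOCAB))  # random preference order
        green = [w for w in candidates if is_green(words[-1], w)]
        words.append(green[0] if green else candidates[0])
    return words

def paraphrase(words, seed=4):
    """Crude stand-in for paraphrasing: replace every other word with a random vocabulary word."""
    rng = random.Random(seed)
    return [rng.choice(VOCAB) if i % 2 else w for i, w in enumerate(words)]

marked = generate_watermarked()
print(f"watermarked z = {z_score(marked):.1f}, paraphrased z = {z_score(paraphrase(marked)):.1f}")
```

The watermarked text scores about 14, far above any reasonable detection threshold, while the crudely paraphrased copy falls back into the range expected of ordinary text. Where the threshold is set also determines how often genuine human text is flagged by chance, which is the Type I error problem.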

A more robust alternative is the digital passport approach, which attaches machine-readable provenance and licensing information to content so that its origin, including human authorship, can be checked later. The Really Simple Licensing (RSL) initiative is one such effort, giving publishers a standard, machine-readable way to declare the terms under which AI systems may use their work. It is a promising concept, but it faces a massive logistical hurdle: it requires the entire internet to agree on a single standard for verification, and right now, the incentives just aren’t there (Columbia Journalism Review, 2025).
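The "digital passport" idea can be sketched as a hash-chained provenance log. This is a hypothetical format built on Python's standard hashlib and json modules, not the RSL specification or any existing standard: each record stores the SHA-256 hash of a piece of content, a provenance claim, and the hash of the previous record, so any later edit to the content or to the history becomes detectable.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def record_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes identically.
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

def append_record(chain: list, content: str, claim: str) -> list:
    """Link a new record to the previous one; each record commits to the content's hash."""
    body = {
        "content_hash": sha256_hex(content.encode("utf-8")),
        "claim": claim,
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    chain.append({**body, "record_hash": record_hash(body)})
    return chain

def verify(chain: list, contents: list) -> bool:
    """True only if every link is intact and every content item matches its recorded hash."""
    if len(chain) != len(contents):
        return False
    prev = GENESIS
    for record, content in zip(chain, contents):
        body = {k: record[k] for k in ("content_hash", "claim", "prev_hash")}
        if (record["prev_hash"] != prev
                or record_hash(body) != record["record_hash"]
                or sha256_hex(content.encode("utf-8")) != record["content_hash"]):
            return False
        prev = record["record_hash"]
    return True

posts = ["An answer written in 2019.", "A follow-up comment."]
chain = []
for post in posts:
    append_record(chain, post, claim="human-authored (self-declared)")
print(verify(chain, posts))                               # True
print(verify(chain, [posts[0] + " (edited)", posts[1]]))  # False: content no longer matches
```

Note what the chain does not do: it proves that content and history have not changed, but the claim of human authorship is only as trustworthy as whoever wrote it. Real deployments would need digital signatures from trusted parties, which brings back the problem of getting everyone to agree on one verification standard.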

5.2 The Only Real Cure: A Balanced Diet

It turns out that AI models are a lot like us: they get sick if they eat only processed food. Research shows that the way to stop model collapse is to maintain a strict diet of real, organic human data. The key finding is that you cannot replace human data with synthetic data entirely; you have to accumulate both. As long as the original human signal remains strong in the mix, the model stays healthy (Gretel.ai, 2024).

Industry leaders are already pivoting to this hybrid approach. IBM, for example, has shifted its focus to pipelines that blend strictly verified human inputs with limited, high-quality synthetic material (VKTR, 2025). Even when synthetic data is used, quality control is everything. Researchers at NYU recently demonstrated that using reinforcement techniques to cherry-pick only the best AI-generated data can overcome performance plateaus, but only with a rigorous external verifier to separate the genius from the gibberish (NYU Center for Data Science, 2024).
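The difference between replacing and accumulating data can be shown with a toy Gaussian loop: each generation fits a mean and variance to its training pool and samples n new points from that fit. In replace mode the pool is only the newest synthetic batch; in accumulate mode the original human data and all earlier batches stay in the pool. The setup (10 points per generation, 300 generations, a standard normal "human" distribution) is an assumption for illustration, not the methodology of Gretel.ai, IBM, or NYU.

```python
import random

def fit(xs):
    """Maximum-likelihood Gaussian fit: sample mean and (biased) sample variance."""
    mu = sum(xs) / len(xs)
    return mu, sum((x - mu) ** 2 for x in xs) / len(xs)

def recursive_training(accumulate, n=10, generations=300, seed=5):
    """Return the fitted variance after repeatedly training on the previous model's samples."""
    rng = random.Random(seed)
    pool = [rng.gauss(0.0, 1.0) for _ in range(n)]  # generation 0: human data from N(0, 1)
    for _ in range(generations):
        mu, var = fit(pool)
        synthetic = [rng.gauss(mu, var ** 0.5) for _ in range(n)]
        # Replace: train only on the newest synthetic batch.
        # Accumulate: keep the human data and every earlier batch in the training pool.
        pool = pool + synthetic if accumulate else synthetic
    return fit(pool)[1]

print(f"replace:    {recursive_training(accumulate=False):.2e}")
print(f"accumulate: {recursive_training(accumulate=True):.2e}")
```

In replace mode the variance collapses by many orders of magnitude; in accumulate mode it stays on the order of the original human variance, because the human data never leaves the mix.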

6 Conclusion: The Ouroboros and the Human Spark

Model collapse is more than just a technical bug; it is a warning sign about the fragility of intelligence itself. As we reach the end of this analysis, it becomes clear that we are facing a paradox: we have built systems designed to surpass human capabilities, only to discover that they cannot survive without us.

The most profound danger is not that AI will stop working or replace humans, but that it will homogenize the human experience. If future models are trained on the output of past models, we risk a flattening of our culture: a recursive loop in which the unique, the weird, and the culturally specific are smoothed out into a bland statistical average. This is already visible in the struggle for linguistic survival. As global giants optimize for English dominance, smaller cultures risk being relegated to the margins of the digital world. The Czech context offers a powerful example of resistance against this trend. The local tech giant Seznam.cz invested tens of millions of Czech crowns to build proprietary models trained specifically on Czech content, recognizing that preserving a language requires more than translation: it requires preserving the cultural logic behind the words (Lupa.cz, 2024).

Source: Castens, 2024.

The phenomenon of model collapse reminds us of the ancient symbol of the Ouroboros, the snake eating its own tail. An AI system that feeds on its own output will eventually starve. It will drift into incoherence and hallucinations (Big Think, 2024).

The future of AI, as it turns out, depends entirely on the continued vitality of the human spirit.

7 Disclaimers and Declarations

Note on Visuals: Figures 1, 2 and 3 were programmatically generated using Python (version 3) with the Matplotlib (Hunter, 2007), NumPy (Harris et al., 2020), and SciPy (Virtanen et al., 2020) libraries.

Writing Assistance: Grammarly was used for sentence structure refinement. All substantive content, arguments, and analysis remain the author’s own work.

Literature search: Claude Opus 4.5 (Anthropic) was used to assist with relevant literature search, citation format verification (APA 7) and to clarify the mathematical reasoning behind how the Central Limit Theorem contributes to variance decay in recursive model training, as introduced in Section 2.3.

Visual Development: Claude Sonnet 4.5 (Anthropic) was used to assist with writing Python code for generating figures. The featured image is a synthetic visualization created by Nano Banana Pro (Google).

8 References

3Blue1Brown. (2024). But what is a neural network? https://www.3blue1brown.com/lessons/neural-networks

Abraham, Y. (2025, October 29). Inside Israel’s deal with Google and Amazon. +972 Magazine. https://www.972mag.com/project-nimbus-contract-google-amazon-israel/

AI News International. (2025). Model collapse or model renaissance? The risk of AI training on AI-generated content. https://www.ainewsinternational.com/model-collapse-or-model-renaissance-the-risk-of-ai-training-on-ai-generated-content/

AI Now Institute. (2024, January 12). OpenAI quietly deletes ban on using ChatGPT for “military and warfare”. https://ainowinstitute.org/news/openai-quietly-deletes-ban-on-using-chatgpt-for-military-and-warfare

Alemohammad, S., Casco-Rodriguez, J., Luzi, L., Brandt, J., Dimakis, A. G., & Mahoney, M. W. (2023). Self-consuming generative models go MAD. arXiv. https://arxiv.org/abs/2307.01850

American Friends Service Committee. (2025, February 12). Microsoft Corp: Company overview. AFSC Investigate. https://investigate.afsc.org/company/microsoft

Big Think. (2024, September 10). “Model collapse” threatens to kill progress on generative AIs. https://bigthink.com/the-future/ai-model-collapse/

Castens. (2024). Creating a one of a kind Ouroboros ring. Castens Jewellery. https://castens.com/en/blog/skabelsen-af-en-unika-slangering/

CB Insights. (2024, September 18). AI content licensing deals: Where OpenAI, Microsoft, Google, and others see opportunity. https://www.cbinsights.com/research/ai-content-licensing-deals/

Cleveland-Stout, N. (2025, October 2). Israel wants to train ChatGPT to be more pro-Israel. Responsible Statecraft. https://responsiblestatecraft.org/israel-chatgpt/

CNBC. (2024, January 18). Mark Zuckerberg indicates Meta is spending billions on Nvidia AI chips. https://www.cnbc.com/2024/01/18/mark-zuckerberg-indicates-meta-is-spending-billions-on-nvidia-ai-chips.html

Coherent Solutions. (2024). AI development cost estimation. https://www.coherentsolutions.com

Columbia Journalism Review. (2025). Reddit is winning the AI game. https://www.cjr.org/analysis/reddit-winning-ai-licensing-deals-openai-google-gemini-answers-rsl.php

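Computertrends. (2024). Otrávit jazykové modely je pozoruhodně snadné [Poisoning language models is remarkably easy]. https://www.computertrends.cz

Computertrends. (2025). Kontaminace dat umělou inteligencí může být nevratný problém [Data contamination by artificial intelligence may be an irreversible problem]. https://www.computertrends.cz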

Cudo Compute. (2024). What is the cost of training large language models? https://www.cudocompute.com

Dekking, F. M., Kraaikamp, C., Lopuhaä, H. P., & Meester, L. E. (2005). A modern introduction to probability and statistics: Understanding why and how. Springer. https://doi.org/10.1007/1-84628-168-7

Digiday. (2024). 2024 in review: A timeline of the major deals between publishers and AI companies. https://digiday.com

Digitální Česko. (2024). AI Akt [AI Act]. https://digitalnicesko.gov.cz/ai-akt/

Feng, Y., Dohmatob, E., & Kempe, J. (2024). A tale of tails: Model collapse as a change of scaling laws. In Proceedings of the 41st International Conference on Machine Learning (Vol. 235, pp. 13313–13338). PMLR. https://proceedings.mlr.press/v235/feng24b.html

Fortune Business Insights. (2025). AI training dataset market size, share | Global report [2032]. https://www.fortunebusinessinsights.com/ai-training-dataset-market-109241

Graphite. (2025). More articles are now created by AI than humans. https://graphite.io/five-percent/more-articles-are-now-created-by-ai-than-humans

Gretel.ai. (2024, August 23). Addressing concerns of model collapse from synthetic data in AI. https://gretel.ai/blog/addressing-concerns-of-model-collapse-from-synthetic-data-in-ai

Harris, C. R., Millman, K. J., van der Walt, S. J., Gommers, R., Virtanen, P., Cournapeau, D., Wieser, E., Taylor, J., Berg, S., Smith, N. J., Kern, R., Picus, M., Hoyer, S., van Kerkwijk, M. H., Brett, M., Haldane, A., del Río, J. F., Wiebe, M., Peterson, P., … Oliphant, T. E. (2020). Array programming with NumPy. Nature, 585(7825), 357–362. https://doi.org/10.1038/s41586-020-2649-2

Hunter, J. D. (2007). Matplotlib: A 2D graphics environment. Computing in Science & Engineering, 9(3), 90–95. https://doi.org/10.1109/MCSE.2007.55

IBM. (2024). What is model collapse? IBM Think. https://www.ibm.com/think/topics/model-collapse

Lupa.cz. (2024, January 17). Seznam.cz chystá vlastní umělou inteligenci: V češtině už je o něco lepší než GPT-3.5 [Seznam.cz is preparing its own artificial intelligence: In Czech, it is already slightly better than GPT-3.5]. https://www.lupa.cz/clanky/seznam-chysta-vlastni-umelou-inteligenci-v-cestine-uz-je-o-neco-lepsi-nez-gpt-3-5/

Lutzker & Lutzker. (2024). Reddit’s licensing agreement with Google. https://www.lutzker.com

Media and The Machine. (2025). The 7 deal points of AI content licensing agreements. https://mediaandthemachine.substack.com

Merriam-Webster. (2025). Normal. In Merriam-Webster.com medical dictionary. https://www.merriam-webster.com/dictionary/normal#medicalDictionary

Meta Stack Overflow. (2022, December 5). Temporary policy: Generative AI (e.g., ChatGPT) is banned. https://meta.stackoverflow.com/questions/421831/policy-generative-ai-e-g-chatgpt-is-banned

NYU Center for Data Science. (2024, August 19). Overcoming the AI data crisis: A new solution to model collapse. Medium. https://nyudatascience.medium.com/overcoming-the-ai-data-crisis-a-new-solution-to-model-collapse-ddc5b382e182

Shumailov, I., Shumaylov, Z., Zhao, Y., Papernot, N., Anderson, R., & Gal, Y. (2024). AI models collapse when trained on recursively generated data. Nature, 631(8022), 755–759. https://doi.org/10.1038/s41586-024-07566-y

Similarweb. (2024). Top 10 data licensing deals that powered AI innovation in 2024. https://www.similarweb.com

TechCrunch. (2024, May 6). Stack Overflow signs deal with OpenAI to supply data to its models. https://techcrunch.com/2024/05/06/

The Decoder. (2024). Reddit reportedly signs $60 million annual training data deal with Google. https://the-decoder.com

The Verge. (2024, January 18). Mark Zuckerberg’s new goal is creating artificial general intelligence. https://www.theverge.com/2024/1/18/24042426/mark-zuckerberg-meta-ai-agi-nvidia-gpu-h100

Trending Topics. (2025). Gemini delivers: In year 3 after ChatGPT, nobody’s laughing at Google anymore. https://trendingtopics.com/gemini-delivers-google-comeback

Variety. (2024, May 21). News Corp inks OpenAI licensing deal potentially worth more than $250 million. https://variety.com/2024/digital/news/news-corp-openai-licensing-deal-1236013734/

VentureBeat. (2024). OpenAI partners with Stack Overflow to make models better at coding. https://venturebeat.com

Virtanen, P., Gommers, R., Oliphant, T. E., Haberland, M., Reddy, T., Cournapeau, D., Burovski, E., Peterson, P., Weckesser, W., Bright, J., van der Walt, S. J., Brett, M., Wilson, J., Millman, K. J., Mayorov, N., Nelson, A. R. J., Jones, E., Kern, R., Larson, E., … SciPy 1.0 Contributors. (2020). SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nature Methods, 17(3), 261–272. https://doi.org/10.1038/s41592-019-0686-2

Visual Capitalist. (2025). Charted: The surging cost of training AI models. https://www.visualcapitalist.com

VKTR. (2025, September 10). Model collapse: How generative AI is eating its own data. https://www.vktr.com/ai-technology/model-collapse-how-generative-ai-is-eating-its-own-data/

Zhou, J., Li, X., Ding, T., You, C., Qu, Q., & Zhu, Z. (2022). On the optimization landscape of neural collapse under MSE loss: Global optimality with unconstrained features. arXiv. https://arxiv.org/abs/2203.01238