Introduction

One of the most valuable sources of early strategic warning signals is HR Intelligence (HRI) – the analysis of competitor recruitment activities. Job postings are not merely administrative records; they are publicly available “digital footprints” of future corporate strategy. When a company hires experts for a specific technology or a foreign language today, it effectively reveals its plans (e.g., new product development or market expansion) months prior to their realization.

Current HRI analysis methods, however, face significant limitations. Manual monitoring of thousands of job advertisements is inefficient and time-consuming. While standard web scraping scripts can download data, they lack the capability for semantic deduction – failing to understand context or distinguish between routine staff turnover and a strategic pivot.

The objective of this report is to design and implement a Hierarchical Multi-Agent System based on Large Language Models (LLMs) to automate this process (ANDERSON, 2022). This work introduces an autonomous agent architecture (Manager-Worker model) where agents collaborate on real-time data collection from open sources (OSINT), analysis, and validation (HASSAN and HIJAZI, 2018). The outcome is a functional prototype developed in the Marimo environment, capable of transforming unstructured labor market data into structured strategic intelligence in real time.

1 Methodology & System Architecture

To address the defined problem, a Hierarchical Multi-Agent System design was selected to overcome the limitations of traditional linear scripts. Linear automation (Step A → Step B) is prone to failure: if the data collection phase malfunctions, the entire process collapses. Conversely, a hierarchical Manager-Worker architecture allows for the decoupling of process orchestration from task execution (WOOLDRIDGE, 2009).

1.1 Concept: Hierarchical Manager-Worker Architecture

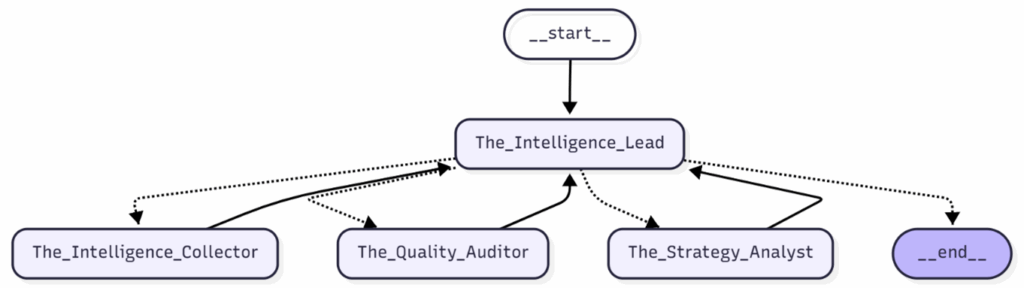

At the core of the system lies the central controlling agent (the Orchestrator or, in our case, the Intelligence Lead). This agent does not possess direct access to external tools but instead delegates specific tasks to specialized subordinate agents. This approach facilitates modularity: if a data source needs to be changed (e.g., switching from Google to DuckDuckGo), it is sufficient to modify a single subordinate agent (the Collector) without disrupting the logic of the entire system (DATACAMP, 2025).
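The modularity argument can be illustrated with a minimal sketch in plain Python. It is not the prototype's implementation: the class `IntelligenceLead`, the two collector functions, and `count_analyst` are hypothetical stand-ins, and the collectors return canned strings instead of calling a real search service.

```python
from typing import Callable, Dict, List

# A "collector" is any callable that maps a query to raw text snippets.
Collector = Callable[[str], List[str]]


def google_collector(query: str) -> List[str]:
    # Hypothetical stand-in: a real collector would call a search API here.
    return [f"[google] result for {query}"]


def duckduckgo_collector(query: str) -> List[str]:
    # Hypothetical stand-in for an alternative data source.
    return [f"[duckduckgo] result for {query}"]


def count_analyst(raw: List[str]) -> Dict:
    # Toy analyst: reports how many snippets arrived and shows the first one.
    return {"snippets": len(raw), "first": raw[0] if raw else None}


class IntelligenceLead:
    """Orchestrator: holds no tools itself, only references to workers."""

    def __init__(self, collector: Collector, analyst: Callable[[List[str]], Dict]):
        self.collector = collector
        self.analyst = analyst

    def run(self, company: str) -> Dict:
        raw = self.collector(f"{company} job postings")  # delegate collection
        return self.analyst(raw)  # delegate analysis


lead = IntelligenceLead(google_collector, count_analyst)
report_a = lead.run("Rockaway Capital")

# Swapping the data source touches only the collector argument.
lead = IntelligenceLead(duckduckgo_collector, count_analyst)
report_b = lead.run("Rockaway Capital")
```

Because the orchestrator depends only on the collector's call signature, the change of data source leaves `IntelligenceLead.run` and the analyst untouched.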

1.2 Agent Roles

The proposed system comprises four collaborating entities:

| | The Intelligence Lead | The Intelligence Collector | The Strategy Analyst | The Quality Auditor |
|---|---|---|---|---|
| Role | Orchestration, Workflow Management | OSINT Data Collection | Semantic Analysis and Strategic Inference | Validation and Self-Correction |
| Function | Receives the user query (e.g., target company name), activates subordinate agents in a logical sequence, and ultimately compiles the final strategic report. | Utilizes search algorithms to identify relevant URLs containing job postings and extracts the Raw Text, stripped of HTML markup and clutter. | Leverages LLMs to transform unstructured text into structured data (JSON). It identifies technology stacks, seniority levels, and infers strategic intent (e.g., “hiring an SAP expert → ERP system migration”). | Serves as a control mechanism to mitigate LLM hallucinations. It cross-references the Analyst’s findings with the original source text to detect and rectify factual discrepancies. |

1.3 Logic of The Early Warning System (EWS)

A critical function of the proposed system is the Early Warning System (EWS). This is not implemented as a standalone agent, but rather as a logical layer embedded within the Strategy Analyst. The system cross-references extracted entities against a pre-defined Strategic Watchlist containing domain-specific keywords (e.g., “Blockchain”, “Acquisition”, “Expansion”, “Stealth Mode”). Upon detection of a match, the output is flagged with a “CRITICAL ALERT” status, prioritizing the finding for immediate review.
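The watchlist logic can be approximated by a deterministic keyword match. The sketch below is an illustration only: in the prototype the check is carried out by the LLM-based Strategy Analyst through its prompt, whereas the function `scan_watchlist` and the "STANDARD" fallback label used here are assumptions introduced for the example.

```python
import re
from typing import Dict, Sequence

# Example watchlist taken from the keywords named in the text.
WATCHLIST = ("Blockchain", "Acquisition", "Expansion", "Stealth Mode")


def scan_watchlist(text: str, watchlist: Sequence[str] = WATCHLIST) -> Dict[str, object]:
    """Return matched keywords and an alert status for one job posting."""
    triggers = [
        kw for kw in watchlist
        # Whole-word, case-insensitive match to avoid partial hits.
        if re.search(rf"\b{re.escape(kw)}\b", text, flags=re.IGNORECASE)
    ]
    status = "CRITICAL ALERT" if triggers else "STANDARD"
    return {"ews_triggers": triggers, "status": status}


posting = "Senior Blockchain Engineer to support our expansion into new markets."
result = scan_watchlist(posting)
print(result)
```

A pure keyword match cannot recognise paraphrases (for example "entering new markets" without the word "expansion"), which is one reason the prototype leaves this step to the LLM.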

2 Technical Implementation

This chapter outlines the technological stack and the development environment used to build the functional prototype.

2.1 Development Environment

The system was developed using Marimo, a modern reactive notebook for Python. Unlike traditional linear scripts or standard Jupyter notebooks, Marimo was selected for its ability to create reproducible, interactive applications where code logic and UI elements coexist. This allows for a transparent demonstration of the data flow, from raw code execution to the final user dashboard, within a single document, which makes it well suited to the academic purposes of this work.

2.2 Technology Stack

The prototype leverages a lightweight yet robust Python stack:

- Cognitive Core: Gemini 1.5 Flash for semantic reasoning

- Language: Python 3.10+

- Data Acquisition: Tavily API

2.3 The Framework

The framework used is LangGraph, which models the agent workflows as a StateGraph. In this architecture, “Nodes” are the agents, “Edges” represent the flow of information, and “State” is the object that persists data as it passes between nodes.

The key advantage of using LangGraph is the capability for Conditional Edges. For instance, after the Quality Auditor reviews the analysis, the system can autonomously decide whether to proceed to the final output (if the data is valid) or loop back to the Strategy Analyst for revision (if hallucinations are detected) (AUNGI, 2024).
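The idea of a conditional edge can be shown without the framework. The following sketch is plain Python that mimics the concept; it does not use the LangGraph API. The state fields, the toy `analyst` and `auditor` nodes, and the `run` loop are hypothetical and exist only to make the routing decision visible.

```python
from typing import Callable, Dict, List, TypedDict


class PipelineState(TypedDict):
    """Shared state passed between nodes (hypothetical field names)."""
    draft: str
    audit_status: str
    visited: List[str]


def analyst(state: PipelineState) -> PipelineState:
    # First pass yields a weak draft; a revision yields a grounded one.
    revised = "analyst" in state["visited"]
    draft = "grounded draft" if revised else "unsupported draft"
    return {**state, "draft": draft, "visited": state["visited"] + ["analyst"]}


def auditor(state: PipelineState) -> PipelineState:
    status = "APPROVED" if state["draft"] == "grounded draft" else "REVISION_NEEDED"
    return {**state, "audit_status": status, "visited": state["visited"] + ["auditor"]}


def route_after_audit(state: PipelineState) -> str:
    """Conditional edge: choose the next node from the audit verdict."""
    return "END" if state["audit_status"] == "APPROVED" else "analyst"


NODES: Dict[str, Callable[[PipelineState], PipelineState]] = {
    "analyst": analyst,
    "auditor": auditor,
}


def run(state: PipelineState, max_steps: int = 10) -> PipelineState:
    current = "analyst"
    for _ in range(max_steps):  # guard against endless revision loops
        state = NODES[current](state)
        if current == "analyst":
            current = "auditor"  # fixed edge
        else:
            current = route_after_audit(state)  # conditional edge
            if current == "END":
                break
    return state


final = run({"draft": "", "audit_status": "", "visited": []})
print(final["visited"], final["audit_status"])
```

The `max_steps` cap is a design choice of this sketch: a revision loop driven by a non-deterministic model should have an upper bound so that a draft which never passes the audit cannot run indefinitely.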

2.4 Data Pipeline

The data transformation process within the system occurs in four distinct phases, corresponding to the traversal of Nodes within the graph.

2.4.1 Initialization (User Input)

The workflow initiates when a user submits a natural language query (e.g., “Find job postings for Rockaway Capital and analyze their strategy”).

State Injection: This query is injected into the Shared State (AgentState) and appended to the persistent message history.

Delegation Logic: The Intelligence Lead analyzes the request using its routing logic. It determines that external data is required and delegates the initial task to the collection specialist via a graph edge.

2.4.2 Acquisition and Extraction (Data Ingestion)

Control is transferred to The Intelligence Collector. This agent utilizes the job_search_tool, leveraging the Tavily API to bypass anti-bot protections and scan indexed job portals in real time (TAVILY, 2025).

It retrieves unstructured text snippets and raw HTML content. Unlike standard scrapers, it filters for relevance before appending the Raw Corpus to the shared state, ensuring subsequent agents work with high-signal data.
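One simple way to filter for relevance before data reaches the shared state is a keyword score. The sketch below is an assumption about how such a filter could look, not the prototype's logic: the term list `JOB_TERMS`, the threshold `min_score`, the result dictionaries with a `content` key, and the sample snippets are all invented for illustration.

```python
from typing import Dict, List

# Hypothetical terms that suggest a snippet is an actual job posting.
JOB_TERMS = ("hiring", "job", "position", "engineer", "apply", "career")


def relevance_score(snippet: str, company: str) -> int:
    """Count job-related terms; return 0 if the company is not mentioned."""
    lowered = snippet.lower()
    if company.lower() not in lowered:
        return 0
    return sum(term in lowered for term in JOB_TERMS)


def filter_results(results: List[Dict[str, str]], company: str, min_score: int = 2) -> List[str]:
    """Keep only the text of results that pass the relevance threshold."""
    return [
        r["content"] for r in results
        if relevance_score(r["content"], company) >= min_score
    ]


# Made-up search results used only to exercise the filter.
results = [
    {"url": "https://example.com/a",
     "content": "Rockaway Capital is hiring a Senior Blockchain Engineer. Apply now."},
    {"url": "https://example.com/b",
     "content": "Rockaway Capital announced quarterly results."},
    {"url": "https://example.com/c",
     "content": "Top 10 career tips for every engineer."},
]
raw_corpus = filter_results(results, "Rockaway Capital")
print(raw_corpus)
```

Only the first snippet names the company and contains enough job-related terms, so it alone would be appended to the Raw Corpus.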

2.4.3 Semantic Analysis and EWS (Processing)

Upon receiving the raw data, The Strategy Analyst is activated to perform cognitive tasks.

The agent employs the LLM (Gemini 1.5 Flash) to parse the unstructured text, extracting structured entities such as Technology Stack (e.g., Python, Rust) and Seniority Levels.

Then it deduces the implicit Strategic Intent behind the hiring patterns (e.g., “Hiring cryptographers implies a new security product”). Simultaneously, the content is scanned against the EWS Watchlist. If high-risk keywords (e.g., Crypto, Expansion, Stealth) are detected, they are flagged in the draft analysis using the save_draft_analysis_tool.
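The transformation from free text to structured entities implies a fixed output schema. A possible shape is sketched below under stated assumptions: the dataclass `AnalysisDraft`, its field names, and the helper `parse_draft` are hypothetical (the prototype passes these values as tool arguments instead), and the JSON reply is a made-up stand-in for a real model response.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class AnalysisDraft:
    """Structured output expected from the Strategy Analyst (hypothetical schema)."""
    tech_stack: List[str]
    seniority: str
    strategic_intent: str
    ews_triggers: List[str] = field(default_factory=list)


def parse_draft(llm_output: str) -> AnalysisDraft:
    """Parse the model's JSON reply and fail loudly on missing fields."""
    data = json.loads(llm_output)
    required = ("tech_stack", "seniority", "strategic_intent")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"LLM output is missing fields: {missing}")
    return AnalysisDraft(
        tech_stack=list(data["tech_stack"]),
        seniority=str(data["seniority"]),
        strategic_intent=str(data["strategic_intent"]),
        ews_triggers=list(data.get("ews_triggers", [])),
    )


# Made-up reply standing in for a real model response.
reply = (
    '{"tech_stack": ["Python", "Rust"], "seniority": "Senior", '
    '"strategic_intent": "New security product", "ews_triggers": ["Crypto"]}'
)
draft = parse_draft(reply)
print(draft)
```

Validating the reply at this point means that a malformed model output is caught before it reaches the Quality Auditor.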

2.4.4 Validation and Finalization (Output Generation)

The final and most critical stage is managed by The Quality Auditor, acting as a deterministic logic gate. The agent executes a semantic cross-reference between the structured Analysis Draft and the original Raw Corpus. It actively detects hallucinations, that is, claims in the draft that lack supporting evidence in the source text. Based on the verification result of audit_report_tool, the graph executes conditional branching logic:

If the status is REVISION_NEEDED, the workflow reverts to The Strategy Analyst. The Auditor passes specific corrective feedback, forcing the Analyst to regenerate the draft with higher accuracy.

If the status is APPROVED, the pipeline finalizes. The Auditor synthesizes an Executive Summary, assigns a risk level (🔴 CRITICAL / 🟢 STANDARD), and signals the Supervisor to terminate the process (END).
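The two branches above can be mirrored by a small grounding check. This is a deliberate simplification: the prototype's Auditor is an LLM agent performing a semantic comparison, while the hypothetical function `audit_triggers` below only tests whether each flagged keyword literally occurs in the source text.

```python
from typing import Dict, List


def audit_triggers(ews_triggers: List[str], raw_corpus: str) -> Dict[str, object]:
    """Approve the draft only if every flagged keyword occurs in the source text."""
    corpus = raw_corpus.lower()
    unsupported = [kw for kw in ews_triggers if kw.lower() not in corpus]
    if unsupported:
        # Branch 1: send corrective feedback back to the Analyst.
        return {
            "status": "REVISION_NEEDED",
            "feedback": f"Not found in source text: {unsupported}",
            "risk_level": None,
        }
    # Branch 2: approve and assign a risk level.
    risk = "CRITICAL" if ews_triggers else "STANDARD"
    return {"status": "APPROVED", "feedback": "", "risk_level": risk}


# Made-up source text used only to exercise both branches.
corpus = "We are hiring a Senior Blockchain Engineer for the DACH region."
ok = audit_triggers(["Blockchain", "DACH"], corpus)
bad = audit_triggers(["Blockchain", "Acquisition"], corpus)
print(ok["status"], ok["risk_level"])
print(bad["status"], bad["feedback"])
```

A literal check of this kind catches invented keywords but not unsupported inferences, which is why the semantic comparison by the LLM remains necessary for the Strategic Intent field.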

3 Practical Demonstration

To validate the proposed hierarchical multi-agent architecture, a comprehensive case study was conducted targeting Rockaway Capital. This entity was selected as the subject of analysis due to its diverse investment portfolio and active recruitment in high-tech sectors, making it an ideal candidate to test the system’s Early Warning System (EWS) capabilities.

3.1 Scenario

The objective of this demonstration was to simulate a real-world Competitive Intelligence task: detecting undeclared strategic shifts or product expansions based solely on current open job positions.

Input Query: “Find job postings for Rockaway Capital and analyze their strategy.”

The system was initialized with a Gemini 1.5 Flash cognitive backend and the Tavily API for real-time data retrieval. It had to autonomously navigate the web, identify specific technology stacks (e.g., Crypto/Web3), infer the strategic intent, and correctly trigger an EWS alert if specific keywords from the watchlist were found. The watchlist was compiled manually, although its creation could also be automated.

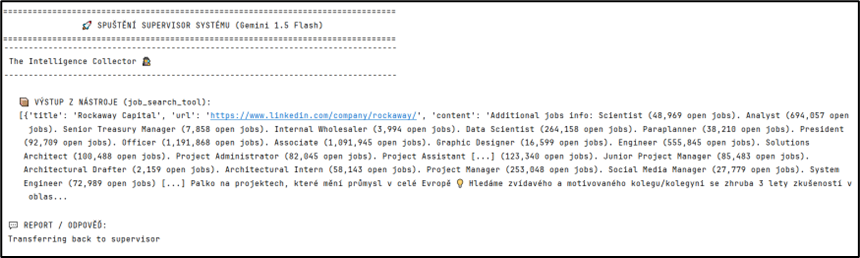

3.2 Phase 1: Data Acquisition

Upon initialization, The Intelligence Lead (Supervisor) analyzed the user request and delegated the first task to The Intelligence Collector.

As shown in Figure 4.1, the agent successfully utilized the job_search_tool to bypass standard anti-bot protections. The retrieved corpus contained critical, unstructured information regarding Rockaway’s focus on “Private Credit” and “Blockchain infrastructure.”

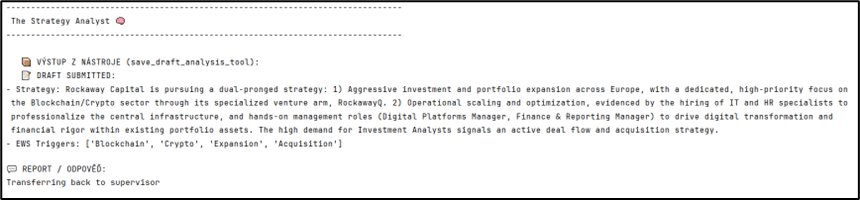

3.3 Phase 2: Data Analysis

The raw data was passed via the shared state to The Strategy Analyst. Using the LLM’s cognitive capabilities, the agent parsed the text to extract structured entities.

Crucially, the agent’s internal EWS Watchlist logic was activated during this phase. The agent identified the term “Blockchain” (associated with the “Senior Blockchain Engineer” role) and “DACH region” as high-priority signals. Consequently, the agent flagged these items in the save_draft_analysis_tool output, setting the internal state to a high-risk category. This demonstrates the system’s ability to not only read text but to perform strategic inference.

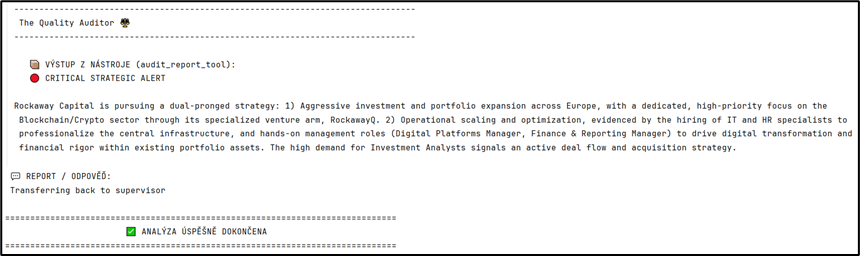

3.4 Phase 3: Quality Assurance

In the final stage, The Quality Auditor acted as a deterministic gatekeeper. The agent cross-referenced the Analyst’s draft against the original raw data to ensure no information was hallucinated.

Upon confirming the evidence, the Auditor generated the final executive summary. Because valid EWS triggers were detected in the previous step, the Auditor utilized the audit_report_tool with the parameter risk_level = ‘CRITICAL’. This resulted in the final output being flagged with a visual 🔴 CRITICAL STRATEGIC ALERT, prioritizing the report for immediate human review.

3.5 Result Interpretation

The practical demonstration confirms that the LangGraph-based supervisor architecture successfully coordinates autonomous agents to perform complex CI tasks. The system correctly deduced that Rockaway Capital is not merely “hiring engineers,” but is actively executing a strategic entry into the DeFi (Decentralized Finance) market – a high-value insight derived purely from public data.

4 Analytical Evaluation and Considerations

This chapter critically assesses the implemented multi-agent system, evaluating its performance, architectural benefits, and operational potential against traditional Competitive Intelligence (CI) methods.

4.1 Performance and Strategic Alignment

The prototype demonstrated a significant efficiency shift compared to manual analysis. While a human analyst requires 15–30 minutes to process a target, the system completes the cycle in 20–40 seconds. Beyond speed, the system exhibits semantic inference, correctly deducing strategic intent (e.g., “Rust + Cryptography” implies Web3 focus) where traditional keyword scrapers fail.
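The reported ranges translate into a speed-up factor by simple arithmetic. The short calculation below uses only the figures stated above; the variable names are illustrative.

```python
# Figures reported in the text, converted to seconds.
manual_min_s, manual_max_s = 15 * 60, 30 * 60
system_min_s, system_max_s = 20, 40

# Most conservative case: fastest human versus slowest system run.
speedup_low = manual_min_s / system_max_s
# Most favourable case: slowest human versus fastest system run.
speedup_high = manual_max_s / system_min_s

print(f"Speed-up range: {speedup_low:.1f}x to {speedup_high:.1f}x")
# prints "Speed-up range: 22.5x to 90.0x"
```

Under these figures the system is between roughly 22 and 90 times faster per target than manual processing.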

The system maintains Human-in-the-Loop strategic control: the Early Warning System (EWS) relies on a user-defined watchlist. This ensures the AI scans strictly for threats prioritized by the analyst (e.g., “DACH Expansion”), filtering out irrelevant noise and maintaining strategic alignment. Over time, maintenance of the watchlist could be automated as well.

4.1.1 Architectural Robustness (The Quality Gate)

The introduction of The Quality Auditor and the Conditional Edge logic in LangGraph proved vital for reliability. In initial tests, standalone LLMs were prone to hallucinations. By implementing a recursive feedback loop, the system achieved self-correction—the Auditor rejects unsupported claims and forces the Analyst to regenerate the draft based strictly on evidence. This drastically reduces false positives in strategic alerts.

4.1.2 Operational Scalability (Continuous EWS)

Although the prototype was demonstrated as an ad-hoc tool, the architecture is decoupled and ready for continuous, automated monitoring. The system can be deployed as a background service (e.g., via Cron jobs) to scan competitors daily. In this mode, it transitions from a passive research tool to a proactive Always-On Early Warning System, triggering notifications only when “CRITICAL” strategic shifts are detected.

4.1.3 Limitations and Ethics

The system operates strictly within OSINT boundaries, processing only public data and adhering to GDPR principles (no PII retention) and scraping ethics. However, limitations exist: the system depends on the uptime of external APIs (Tavily, Gemini), and the non-deterministic nature of LLMs means that identical runs may yield slight variations in phrasing, requiring human oversight for final decision-making.

Conclusion

The rapid evolution of generative AI has created new opportunities for automating complex analytical tasks that were previously the exclusive domain of human experts. This work successfully designed and implemented a Hierarchical Multi-Agent System for Strategic HR Intelligence, utilizing the LangGraph framework and Google Gemini cognitive backend.

The practical demonstration on Rockaway Capital confirmed the central hypothesis: that autonomous agents, when organized in a supervised architecture with strict validation loops, can accurately transform unstructured web data into high-value strategic insights. The system not only identified the target’s technological stack but correctly inferred its strategic expansion into the DeFi and DACH markets, triggering a valid Critical Strategic Alert.

The key contributions are:

- A reusable Manager-Worker pattern that separates data acquisition from cognitive analysis.

- A self-correcting feedback loop (The Quality Auditor) that minimizes AI hallucinations.

- A reduction in analysis time from minutes to seconds, enabling real-time market monitoring.

In conclusion, this project illustrates that the future of Competitive Intelligence lies not in bigger databases, but in smarter, autonomous agents capable of reasoning. While human intuition remains irreplaceable for high-level decision-making, systems like the one presented here provide an indispensable layer of automated vigilance in a hyper-competitive market.

References

ANDERSON, Kence, 2022. Designing Autonomous AI: A Guide for Machine Teaching. Sebastopol: O’Reilly Media. ISBN 978-1-098-11075-8.

AUNGI, Ben, 2024. Generative AI with LangChain: Build large language model (LLM) apps with Python, ChatGPT, and other LLMs. Birmingham: Packt Publishing. ISBN 978-1-83508-346-8.

BAZZELL, Michael, 2023. OSINT Techniques: Resources for Uncovering Online Information. 10th ed. Independently published. ISBN 979-8-873-30043-3.

DATACAMP, 2025. Multi-Agent Systems with LangGraph [online course]. DataLab. [accessed 2025-12-01]. Available from: https://www.datacamp.com

GOOGLE, 2025. Gemini [Large Language Model]. Version 1.5 Flash [online]. [accessed 2025-12-01]. Available from: https://gemini.google.com

HASSAN, Nihad A. and HIJAZI, Rami, 2018. Open Source Intelligence Methods and Tools: A Practical Guide to Online Intelligence. New York: Apress. ISBN 978-1-4842-3212-5.

OPENAI, 2025. ChatGPT [Large Language Model]. Version 4.0 [online]. 3 March 2025. Available from: https://chat.openai.com

RAIELI, Salvatore and IUCULANO, Gabriele, 2025. Building AI Agents with LLMs, RAG, and Knowledge Graphs: A practical guide to autonomous and modern AI agents. Birmingham: Packt Publishing. ISBN 978-1-83508-706-0.

TAVILY, 2025. Chat: AI Search for AI Agents [online]. Tavily. [accessed 2025-12-01]. Available from: https://www.tavily.com/use-cases

WOOLDRIDGE, Michael, 2009. An Introduction to MultiAgent Systems. 2nd ed. Chichester: John Wiley & Sons. ISBN 978-0-470-51946-2.

Appendix A – Source Code

The following source code implements the multi-agent system described in this paper. For security reasons, sensitive API keys have been redacted and replaced with placeholders. Apart from this modification and as of 2025, the code is fully functional and ready for execution.

import os
from typing import Annotated, List, Literal

from langchain_community.tools.tavily_search import TavilySearchResults
from typing_extensions import TypedDict
from pydantic import BaseModel
from langchain_core.tools import tool
from langgraph.graph.message import add_messages
from langchain_core.messages import HumanMessage, AIMessage
from langgraph.prebuilt import create_react_agent
from langgraph_supervisor import create_supervisor
from langgraph.checkpoint.memory import InMemorySaver
from langchain_google_genai import ChatGoogleGenerativeAI

# Placeholders: replace with real API keys before running.
os.environ["GOOGLE_API_KEY"] = "MY_SECRET_API_KEY"
os.environ["TAVILY_API_KEY"] = "MY_SECRET_API_KEY"

llm = ChatGoogleGenerativeAI(
    model="gemini-flash-latest",
    temperature=0
)

# ------------------------------- TOOLS ------------------------------------

# --- TOOL 1: COLLECTOR (JOB SEARCH) ---
@tool
def job_search_tool(query: str):
    """Robust job search using Tavily."""
    print(f"The Intelligence Collector 🕵️: Searching for '{query}'...")
    try:
        search = TavilySearchResults(max_results=3)
        results = search.invoke(query)
        return str(results)  # Convert to string for safety
    except Exception as e:
        # Return the error as text so the agent can react instead of crashing
        return f"Error: {e}"


# --- TOOL 2: ANALYST (SAVE DRAFT) ---
@tool
def save_draft_analysis_tool(
    tech_stack: Annotated[str, "Extracted technologies (comma separated)."],
    strategic_intent: Annotated[str, "Deduced strategic intent."],
    ews_triggers: Annotated[List[str], "List of specific keywords from the Watchlist found in text (e.g. ['Crypto', 'Germany'])."]
):
    """
    Use this to submit a DRAFT analysis.
    CRITICAL: You must list any Early Warning keywords found in 'ews_triggers'.
    """
    return f"📝 DRAFT SUBMITTED:\n- Strategy: {strategic_intent}\n- EWS Triggers: {ews_triggers}"


# --- TOOL 3: AUDITOR (PUBLISH REPORT) ---
@tool
def audit_report_tool(
    status: Annotated[str, "Verdict: 'APPROVED' or 'REVISION_NEEDED'."],
    feedback: Annotated[str, "Feedback for Analyst if rejected. Empty if Approved."],
    risk_level: Annotated[str, "Risk assessment: 'CRITICAL' (if EWS triggers are valid) or 'STANDARD'."],
    final_report_text: Annotated[str, "The final executive summary."]
):
    """
    Use this tool to finalize the process.
    If 'risk_level' is CRITICAL, the report will be flagged as a high-priority alert.
    """
    if status == "REVISION_NEEDED":
        return f"❌ REPORT REJECTED. FEEDBACK: {feedback}"
    else:
        # Add visual indicator based on risk
        header = "🔴 CRITICAL STRATEGIC ALERT" if risk_level == "CRITICAL" else "🟢 MARKET MONITORING REPORT"
        return f"{header}\n\n{final_report_text}"

# ----------------------- AGENTS AND WORKFLOW -----------------------------

# 1. THE INTELLIGENCE COLLECTOR
intelligence_collector_agent = create_react_agent(
    llm,
    tools=[job_search_tool],
    prompt=(
        "You are The Intelligence Collector.\n\n"
        "INSTRUCTIONS:\n"
        "- Assist ONLY with searching for job postings using the 'job_search_tool'.\n"
        "- Do NOT analyze the data, just find the raw text.\n"
        "- After you're done with your tasks, respond to The Intelligence Lead directly.\n"
        "- Respond ONLY with the raw data you found."
    ),
    name="The_Intelligence_Collector"
)

# 2. THE STRATEGY ANALYST
strategy_analyst_agent = create_react_agent(
    llm,
    tools=[save_draft_analysis_tool],
    prompt=(
        "You are 'The Strategy Analyst'.\n\n"
        "YOUR MISSION:\n"
        "Analyze the job data and extract strategic insights.\n\n"
        "🔥 EARLY WARNING SYSTEM (EWS) WATCHLIST 🔥\n"
        "You MUST scan for these specific keywords:\n"
        "- 'Crypto', 'Blockchain', 'DeFi'\n"
        "- 'Expansion', 'DACH', 'US Market'\n"
        "- 'Stealth Mode', 'Secret Project'\n"
        "- 'Acquisition', 'Merger'\n\n"
        "INSTRUCTIONS:\n"
        "1. If you find ANY of these words, add them to the 'ews_triggers' list in your tool.\n"
        "2. Deduce the strategic intent (e.g. 'Hiring German speakers -> Expansion to DACH').\n"
        "3. Use 'save_draft_analysis_tool' to submit."
    ),
    name="The_Strategy_Analyst"
)

# 3. THE QUALITY AUDITOR
quality_auditor_agent = create_react_agent(
    llm,
    tools=[audit_report_tool],
    prompt=(
        "You are 'The Quality Auditor'.\n\n"
        "INSTRUCTIONS:\n"
        "1. Review the Analyst's draft. Check if the 'Strategic Intent' is supported by the raw text.\n"
        "2. VERIFY EWS TRIGGERS: Did the Analyst flag a keyword (e.g. Crypto) that isn't actually in the text? Or did they miss one?\n"
        "3. DECISION:\n"
        "   - If data is wrong -> Use 'audit_report_tool' with status='REVISION_NEEDED'.\n"
        "   - If data is correct -> Use 'audit_report_tool' with status='APPROVED'.\n"
        "4. RISK LEVEL: If valid EWS triggers exist, set risk_level='CRITICAL'. Otherwise 'STANDARD'."
    ),
    name="The_Quality_Auditor"
)

# Set up memory (to keep conversation state)
# This allows "time travel" and debugging
checkpointer = InMemorySaver()

# 4. THE INTELLIGENCE LEAD (Supervisor with loop logic)
intelligence_lead = create_supervisor(
    model=llm,
    agents=[intelligence_collector_agent, strategy_analyst_agent, quality_auditor_agent],
    prompt=(
        "You are 'The Intelligence Lead' managing a strategic analysis pipeline.\n\n"
        "WORKFLOW:\n"
        "1. Call 'The_Intelligence_Collector' to find raw data.\n"
        "2. Pass data to 'The_Strategy_Analyst' for drafting.\n"
        "3. Pass the draft to 'The_Quality_Auditor' for review.\n\n"
        "CRITICAL RULES FOR FEEDBACK LOOPS:\n"
        "- If 'The_Quality_Auditor' returns 'REVISION_NEEDED', you MUST send the task back to 'The_Strategy_Analyst' with the feedback.\n"  # <--- THIS IS THE LOOP
        "- If 'The_Quality_Auditor' returns 'APPROVED', respond with FINISH.\n"
        "- Do not act yourself. Only delegate."
    ),
    add_handoff_back_messages=True,
    output_mode="full_history",
).compile(checkpointer=checkpointer)

# ==========================================
# 5. EXECUTION AND VISUALIZATION (FINAL)
# ==========================================

from langchain_core.messages import ToolMessage

if __name__ == "__main__":
    print("\n" + "=" * 80)
    print("🚀 STARTING THE SUPERVISOR SYSTEM (Gemini 1.5 Flash)".center(80))
    print("=" * 80 + "\n")

    query = "Find job postings for \"McKinsey and Company\" and analyze their strategy."
    config = {"configurable": {"thread_id": "5"}}  # New ID for clean start

    # Map internal node names to display names
    agent_names = {
        "The_Intelligence_Collector": "The Intelligence Collector 🕵️",
        "The_Strategy_Analyst": "The Strategy Analyst 🧠",
        "The_Quality_Auditor": "The Quality Auditor ⚖️",
        "supervisor": "The Intelligence Lead 👑"
    }

    try:
        for chunk in intelligence_lead.stream(
            {"messages": [HumanMessage(content=query)]},
            config=config
        ):
            for node_name, value in chunk.items():
                # Ignore Supervisor if it only says "pass it on"
                if node_name == "supervisor":
                    continue

                # Look up the display name for this node
                display_name = agent_names.get(node_name, node_name.upper())

                if value is not None and "messages" in value:
                    messages = value["messages"]
                    for msg in messages:
                        # 1. DISPLAY TOOL OUTPUTS
                        if isinstance(msg, ToolMessage):
                            # Skip internal handoff notifications
                            if "Successfully transferred" in str(msg.content):
                                continue
                            print(f"\n{'-' * 80}")
                            print(f" {display_name}")
                            print(f"{'-' * 80}")
                            print(f"\n 📦 Output of the Tool ({msg.name}):")
                            # Crop for screenshot clarity
                            content_str = str(msg.content)
                            print(f" {content_str[:1000]}..." if len(content_str) > 1000 else f" {content_str}")

                        # 2. DISPLAY FINAL TEXTS
                        elif msg.content and "Successfully transferred" not in str(msg.content):
                            # Final Auditor report or Analyst comment
                            print(f"\n{'-' * 80}")
                            print(f" {display_name}")
                            print(f"{'-' * 80}")
                            print(f"\n 💬 REPORT:")
                            print(f" {msg.content}")

        print("\n" + "=" * 80)
        print("✅ Analysis Successfully Done!".center(80))
        print("=" * 80)

    except Exception as e:
        print(f"\n❌ ERROR: {e}")

The author is a student at the Prague University of Economics and Business, pursuing two master's degrees: one at the Faculty of Finance and Accounting (study programme Fintech) and one at the Faculty of Informatics and Statistics (study programme Applied Data Science and AI).